Data releases are wonderful because they give us real information about a topic, often replacing anecdotal evidence that depends more on who we know than on the true state of a Thing. My thoughts on financial success after college depend partly on whether I hung out with aspiring educators or management consultants, for example. Hearing that one of your friends had a great experience at College X might lead you to recommend it, but there's a chance that your friend's experience was one of the rare positive ones. I am all for releasing data.

However, in looking at data, we still need to apply context and analysis. Data depends on a variety of factors, such as the group being examined or the method used to collect it. College ranking data is especially scary when used long-term because the selective colleges being examined have a lot of control, through their admissions and administrative processes, over some of the data inputs. For example, US News looks at the % of classes with under 20 students - and I'm not sure it's a coincidence that some colleges cap certain classes at exactly 19 students.

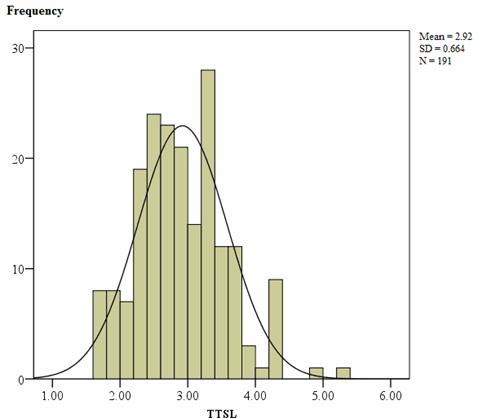

In a world without manipulation, the US News datapoint could be used to infer a bell curve of class sizes. Instead, I suspect that colleges work to shove as many classes as possible under that 19-person cap - meaning that a college with a mix of 10-person and 15-person classes ends up looking equivalent to a college full of 19-person classes, even though the 10-person experience is much more rewarding (in my anecdotal experience).

Example of a bell curve with a spike at one level
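To make the class-size point concrete, here is a minimal sketch in Python. Both colleges and their class sizes are made up for illustration, and `share_under_20` is a hypothetical helper, not how US News actually computes its figure.

```python
from statistics import mean


def share_under_20(class_sizes):
    """Fraction of classes with fewer than 20 students."""
    return sum(1 for size in class_sizes if size < 20) / len(class_sizes)


# Hypothetical colleges, 100 classes each (invented numbers).
college_a = [10] * 50 + [15] * 50  # a mix of 10- and 15-person classes
college_b = [19] * 100             # every class capped at exactly 19

# The threshold metric cannot tell the two colleges apart...
metric_a = share_under_20(college_a)
metric_b = share_under_20(college_b)

# ...even though the average class size is quite different.
mean_a = mean(college_a)
mean_b = mean(college_b)

print(f"College A: {metric_a:.0%} under 20, average size {mean_a}")
print(f"College B: {metric_b:.0%} under 20, average size {mean_b}")
```

Both colleges score 100% on the "under 20" measure, while the average class at the second one is about half again as large. Any single cutoff hides whatever happens on either side of it.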

The worst implication of using and relying on data like this is that the attributes that make a strong college cohort may end up producing data that looks "worse" than other cohorts - meaning that even colleges that seek to do the best job possible have an incentive to shape their incoming classes to produce stronger-looking data.

For example, a college that wants to produce graduates with higher incomes after 10 years might have an incentive to take more students interested in economics, technology, or engineering - disadvantaging those interested in other fields. Colleges interested in keeping a higher graduation rate might shy away from admitting anyone with mental health struggles or other risk factors that could lead them not to graduate - regardless of how strong the college is. Finally, colleges interested in reporting a high average aid amount could seek more students who are extremely poor (who need more aid) and disadvantage those from middle class families that need only a small amount of aid.

That last paragraph describes the three primary data points used by the College Scorecard. That doesn't make the data that was used bad, but it does mean that we should pay attention to the incentives that we create in rating systems and how to actively counteract them. For example, colleges could get "credits" on their graduation rates by admitting more students with active risk factors, or by being able to report different data about why students don't complete a degree. Average earnings data should take into account the earnings of a student's parents and seek to remove that variable, since a college is more important for the change it makes to a student.
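One rough way to "remove that variable" is to compare each college's graduate earnings with what its students' parental income alone would predict, and to rank on the difference. The sketch below assumes a simple straight-line fit across colleges. The four colleges, their numbers, and the `fit_line` helper are all invented for illustration, and this is not how the College Scorecard reports earnings.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data, in thousands of dollars:
# (name, median parental income, median graduate earnings after 10 years)
colleges = [
    ("College A", 150, 80),
    ("College B", 60, 55),
    ("College C", 100, 60),
    ("College D", 40, 50),
]

parent_income = [c[1] for c in colleges]
grad_earnings = [c[2] for c in colleges]
slope, intercept = fit_line(parent_income, grad_earnings)

# "Adjusted" earnings: how far graduates land above or below what
# parental income alone would predict for that college.
adjusted = {
    name: earnings - (intercept + slope * income)
    for name, income, earnings in colleges
}

raw_ranking = [c[0] for c in sorted(colleges, key=lambda c: c[2], reverse=True)]
adjusted_ranking = sorted(adjusted, key=adjusted.get, reverse=True)

print("Raw ranking:     ", raw_ranking)
print("Adjusted ranking:", adjusted_ranking)
```

In this made-up data, College C ranks second on raw earnings but last once parental income is accounted for, while College D moves from last to second. A real adjustment would need far more data and care, but even this toy version shows how much a ranking can owe to who was admitted.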

In the end, a college isn't everything in a student's future. We shouldn't make schools own outcomes that depend on the students they choose to admit - instead we should look at how a college bends an incoming class's future toward job satisfaction, strong connections with peers, impact towards social good, and ultimate life happiness. Is that data available, truly? I doubt it.